Company reveals technology capable of increasing picture resolution 16-fold, effectively restoring lost data – but results still an educated guess

Google’s neural networks have achieved the dream of CSI viewers everywhere: the company has revealed a new AI system capable of “enhancing” an 8x8 pixel image, increasing the resolution 16-fold and effectively restoring lost data.

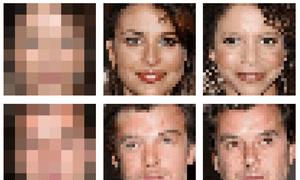

The neural network could be used to increase the resolution of blurred or pixelated faces in a way previously thought impossible. A similar system was demonstrated for enhancing images of bedrooms, again creating a 32x32 pixel image from an 8x8 one.

Google’s researchers describe the neural network as “hallucinating” the extra information. The system was trained on a vast number of images of faces, so that it learned typical facial features. A second part of the system, meanwhile, compares the 8x8 pixel image with all the possible 32x32 pixel images it could be a shrunken version of.
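The idea of matching a small image against the larger images it could be a shrunken version of can be illustrated with a simple consistency check. The sketch below is hypothetical and is not how Google’s network works internally: it assumes grayscale images stored as lists of rows, treats “shrinking” as averaging each 4x4 block of pixels (an assumed filter; other downsampling methods exist), and scores a 32x32 candidate by how closely its shrunken version matches the observed 8x8 image. It also confirms the 16-fold figure: 32 x 32 = 1,024 pixels against 8 x 8 = 64.

```python
# Hypothetical illustration, not Google's implementation: scores how well a
# high-resolution candidate "shrinks" back to an observed low-resolution image.

Image = list[list[float]]  # grayscale image as a list of rows


def downsample(img: Image, factor: int) -> Image:
    """Shrink a square image by averaging each factor x factor block (assumed filter)."""
    size = len(img) // factor
    return [
        [
            sum(img[r * factor + i][c * factor + j]
                for i in range(factor) for j in range(factor)) / (factor * factor)
            for c in range(size)
        ]
        for r in range(size)
    ]


def consistency_error(candidate: Image, observed: Image) -> float:
    """Mean squared difference between the shrunken candidate and the observed image."""
    factor = len(candidate) // len(observed)
    shrunk = downsample(candidate, factor)
    n = len(observed)
    return sum((shrunk[r][c] - observed[r][c]) ** 2
               for r in range(n) for c in range(n)) / (n * n)


observed = [[float(r + c) for c in range(8)] for r in range(8)]  # toy 8x8 input
# One possible 32x32 candidate: each observed pixel repeated as a 4x4 block.
candidate = [[observed[r // 4][c // 4] for c in range(32)] for r in range(32)]

print((32 * 32) // (8 * 8))                    # 16 times as many pixels
print(consistency_error(candidate, observed))  # 0.0: shrinks back exactly
```

Many different 32x32 candidates shrink to exactly the same 8x8 image and score 0, so a check like this cannot choose a face by itself. In the article’s description, that choice falls to the part of the system that has learned what faces typically look like.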

Working in harmony, the two networks effectively redraw their best guess of what the original face looked like. This is a huge improvement over old-fashioned methods of up-sampling: where an older system might simply take a block of red in the middle of a face, make it 16 times bigger and blur the edges, Google’s system can recognise that it is likely to be a pair of lips and draw the image accordingly.
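For comparison, here is a minimal sketch of the kind of old-fashioned up-sampling described above, assuming plain bilinear interpolation (a common choice; the article does not say which method it has in mind) on a grayscale image stored as a list of rows. Each new pixel is a weighted blend of its four nearest original pixels, so enlarging an 8x8 image to 32x32 can only spread existing values out and soften edges; nothing in it could recognise a pair of lips.

```python
# Assumed baseline: bilinear interpolation, one common "old-fashioned"
# up-sampling method (the article does not name a specific one).

Image = list[list[float]]  # grayscale image as a list of rows


def bilinear_upsample(img: Image, factor: int) -> Image:
    """Enlarge img by `factor` per side; each new pixel blends its 4 nearest inputs."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h * factor):
        # Map the output pixel centre back into input coordinates, clamped to the image.
        sy = min(max((y + 0.5) / factor - 0.5, 0.0), h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(w * factor):
            sx = min(max((x + 0.5) / factor - 0.5, 0.0), w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# Toy 8x8 image with a hard vertical edge: dark left half, bright right half.
sharp = [[0.0] * 4 + [255.0] * 4 for _ in range(8)]
big = bilinear_upsample(sharp, 4)  # 32x32: 16 times as many pixels

print(len(big), len(big[0]))  # 32 32
print(big[0][12:20])
# [0.0, 0.0, 31.875, 95.625, 159.375, 223.125, 255.0, 255.0]
```

On this toy image the sharp step between dark and bright becomes a gradual ramp across several pixels, which is the blurred edge the article describes.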

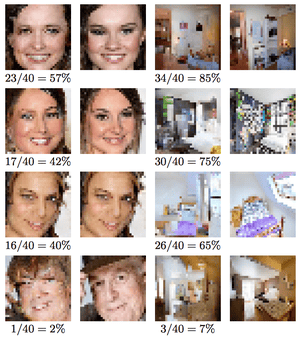

Of course, the system isn’t capable of magic. While it can make educated guesses based on knowledge of what faces generally look like, it sometimes won’t have enough information to redraw a face that is recognisably the same person as in the original image. And sometimes it just plain screws up, creating inhuman monstrosities. Nonetheless, for images of faces the system works well enough to fool people around 10% of the time.

Running the same system on pictures of bedrooms works even better: test subjects failed to pick out the original image almost 30% of the time. A score of 50% would indicate that the system was creating images indistinguishable from reality, since subjects would then be doing no better than guessing.
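The 50% benchmark follows from how such tests are typically scored. Assuming each trial shows a subject one real and one generated image and asks which is real (a two-way forced choice; the article does not describe the exact protocol), a subject who cannot tell them apart picks the generated one half the time. The sketch below computes that “fool rate” from hypothetical trial outcomes, not the study’s data, and simulates a subject guessing at random.

```python
import random


def fool_rate(picked_generated: list[bool]) -> float:
    """Fraction of trials in which the subject chose the generated image as the real one."""
    if not picked_generated:
        raise ValueError("need at least one trial")
    return sum(picked_generated) / len(picked_generated)


# Hypothetical outcomes, not data from the study: fooled in 3 of 10 trials.
trials = [True, False, False, True, False, False, False, True, False, False]
print(fool_rate(trials))  # 0.3

# A subject guessing at random is fooled about half the time, which is why
# 50% marks images indistinguishable from real ones.
rng = random.Random(0)
guesses = [rng.random() < 0.5 for _ in range(100_000)]
print(fool_rate(guesses))
```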

Although this system exists at the extreme end of image manipulation,

neural networks have also presented promising results for more

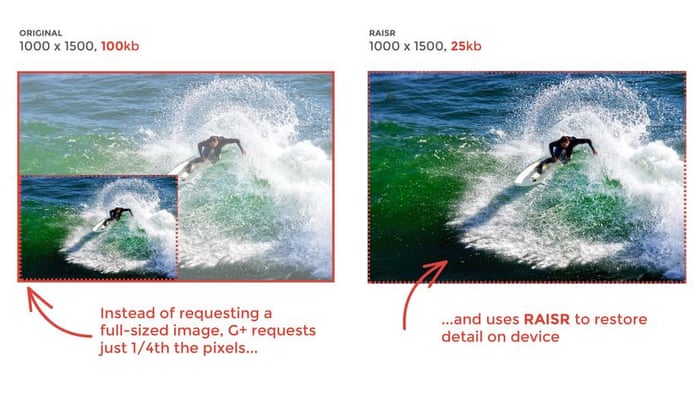

conventional compression purposes. In January, Google announced

it would use a machine learning-based approach to compress images on

Google+ four-fold, saving users bandwidth by limiting the amount of

information that needs to be sent. The system then makes the same sort

of educated guesses about what information lies “between” the pixels to

increase the resolution of the final picture.